The world of IT operations is facing a complexity crisis. As applications become collections of tiny, interdependent microservices that are often spread across multiple clouds, the sheer volume of operational data is overwhelming. We’ve moved beyond simple monitoring (Is the service up?) to observability (Why is the service slow?).

Now, the newest trend is leveraging GenAI to transform this mountain of data into instant, actionable intelligence: AIOps. But to talk to your system like a true expert, the AI needs a clean, standardized, and unified data stream. That’s where OpenTelemetry (OTel) becomes the essential foundation.

The Data Silo Problem: Why AI Gets Confused

Historically, observability data fell into three distinct buckets, each with its own format and tool:

- Metrics: Time-series numbers (CPU usage, request rate).

- Logs: Text records of discrete events (error messages, status updates).

- Traces: The path a single request takes across services.

When these are separate, a human engineer has to manually correlate them—like comparing three different binders of evidence. When a GenAI model is fed this siloed data, it struggles to connect the dots:

- “The database latency spiked (Metric), but what caused it?”

- “I see an error log, but where did that request come from and which service did it touch?”

This is why GenAI-powered AIOps needs a unified data language.
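To make the correlation problem concrete, here is a minimal sketch in plain Python. The record shapes and field names (`trace_id`, `service`, and so on) are illustrative assumptions, not an OpenTelemetry schema. In real OTel data, logs can carry a trace ID directly, while metrics usually link to traces through exemplars; the sketch flattens that detail so the join is easy to see.

```python
from collections import defaultdict

# Hypothetical records from three separate tools. Field names are
# illustrative only and are not taken from any OpenTelemetry schema.
metrics = [
    {"name": "db.latency_ms", "value": 950, "trace_id": "a1"},
    {"name": "db.latency_ms", "value": 12, "trace_id": "b2"},
]
logs = [
    {"level": "ERROR", "message": "query timeout", "trace_id": "a1"},
]
spans = [
    {"service": "checkout", "operation": "POST /pay", "trace_id": "a1"},
    {"service": "orders-db", "operation": "SELECT orders", "trace_id": "a1"},
    {"service": "catalog", "operation": "GET /items", "trace_id": "b2"},
]


def correlate(metrics, logs, spans):
    """Group all three signal types by the trace ID they share."""
    bundles = defaultdict(lambda: {"metrics": [], "logs": [], "spans": []})
    for signal, records in (("metrics", metrics), ("logs", logs), ("spans", spans)):
        for record in records:
            bundles[record["trace_id"]][signal].append(record)
    return dict(bundles)


bundles = correlate(metrics, logs, spans)
slow = bundles["a1"]

# Everything known about the slow request now sits in one place.
services = [span["service"] for span in slow["spans"]]
print("services touched:", services)
print("errors:", [entry["message"] for entry in slow["logs"]])
```

Without the shared key, the same questions would have to be answered by guessing from timestamps and host names, which is exactly where both humans and models lose the thread.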

OpenTelemetry: The Universal Translator for Observability

OpenTelemetry (OTel) is an open-source standard designed to solve this problem. It provides a vendor-agnostic framework for collecting all three signals (Metrics, Logs, and Traces) using a single set of APIs and SDKs, all under a consistent data schema.
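One concrete piece of that consistent schema is the identifier that ties a request together as it crosses services. By default, OTel propagates it using the W3C Trace Context `traceparent` header, which has the form `version-traceid-parentid-flags`. The sketch below builds and parses that header with the standard library only. The function names are hypothetical, it handles version `00` only, and in practice the OTel SDK does this for you.

```python
import re
import secrets

# W3C Trace Context, version 00:
#   00-<32 hex chars trace-id>-<16 hex chars parent-id>-<2 hex chars flags>
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Create a traceparent header value for a brand-new trace."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex chars
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header):
    """Split a version-00 traceparent header into its fields."""
    match = TRACEPARENT_RE.match(header)
    if match is None:
        raise ValueError(f"not a version-00 traceparent: {header!r}")
    trace_id, parent_id, flags = match.groups()
    # All-zero IDs are defined as invalid by the specification.
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        raise ValueError("trace-id and parent-id must not be all zeros")
    return {
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
context = parse_traceparent(incoming)
print(context["trace_id"], context["sampled"])
```

Every service that forwards this header keeps the same trace ID, which is what lets downstream tooling stitch spans and logs from different services into one request.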

How OTel Powers GenAI

For GenAI to perform its magic, the data must be structured and contextually linked. OTel achieves this by:

- Standardizing Context: When OTel instruments an application, it propagates a trace context (trace ID and span ID) along with each request. Logs emitted during that request can carry the same IDs, and metrics can point back to sample traces through exemplars. This ties the log entry for an error and the metric showing high CPU usage to the same transaction.

- Rich Data for LLMs: A Large Language Model (LLM) thrives on context. OTel’s standardized data allows the LLM to ingest a complete picture of a transaction rather than isolated fragments.

- Future-Proofing GenAI Observability: As organizations integrate GenAI models into their own applications (for example, an LLM behind a conversational assistant), the OTel community is developing Semantic Conventions for generative AI to capture AI-specific data such as:

  - Token Usage: Crucial for monitoring LLM costs.

  - Model Latency: Tracking the performance of the AI model itself.

  - Prompt/Response Tracing: Tracking the journey of an AI query.
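As a rough illustration of the AI-specific data listed above, the sketch below records an LLM call as a span-like dictionary. The attribute keys (`gen_ai.operation.name`, `gen_ai.request.model`, `gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`) follow the OTel generative AI semantic conventions as they stood at the time of writing; those conventions are still evolving, so check the current spec before relying on them. Everything else is an assumption made for the example: `fake_llm_call`, the model name, and the prices are hypothetical, and no OTel SDK is used.

```python
import time

# Hypothetical prices in USD per 1,000 tokens. Not real vendor pricing.
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}


def fake_llm_call(prompt):
    """Stand-in for a real model call; counts words as if they were tokens."""
    return {"text": "ok", "input_tokens": len(prompt.split()), "output_tokens": 1}


def traced_llm_call(prompt, model="example-model"):
    """Run the call and return a span-like record describing it."""
    start = time.perf_counter()
    response = fake_llm_call(prompt)
    duration_s = time.perf_counter() - start  # model latency
    return {
        "name": f"chat {model}",
        "duration_s": duration_s,
        "attributes": {
            "gen_ai.operation.name": "chat",
            "gen_ai.request.model": model,
            "gen_ai.usage.input_tokens": response["input_tokens"],
            "gen_ai.usage.output_tokens": response["output_tokens"],
        },
    }


def estimated_cost_usd(span):
    """Turn the recorded token counts into a cost estimate."""
    attrs = span["attributes"]
    input_cost = attrs["gen_ai.usage.input_tokens"] / 1000 * PRICE_PER_1K["input"]
    output_cost = attrs["gen_ai.usage.output_tokens"] / 1000 * PRICE_PER_1K["output"]
    return input_cost + output_cost


span = traced_llm_call("why is the checkout service slow")
print(span["name"], span["attributes"], estimated_cost_usd(span))
```

Because the token counts live on the span itself, cost and latency can be broken down by model, service, or individual request using the same trace data as everything else.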

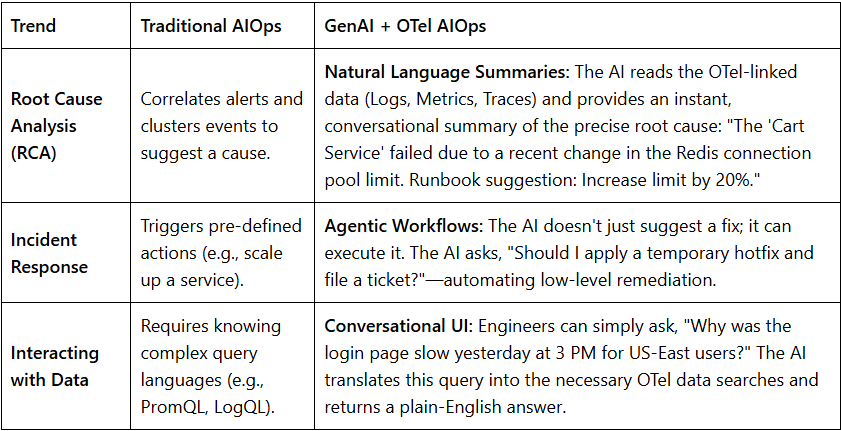

The AIOps Evolution: From Alerts to Conversations

With OTel as the common language, GenAI-powered AIOps can shift from firing alerts to holding a conversation about what the system is doing and why.

OpenTelemetry is no longer just a nice-to-have; it’s the prerequisite for achieving the true promise of AIOps. By unifying the data, OTel gives the next generation of AI the clear, comprehensive vision it needs to move from identifying problems to truly autonomous IT operations.

Further Reading: https://www.openturf.in/open-telemetry-standardizing-observability-cloud-native-world/